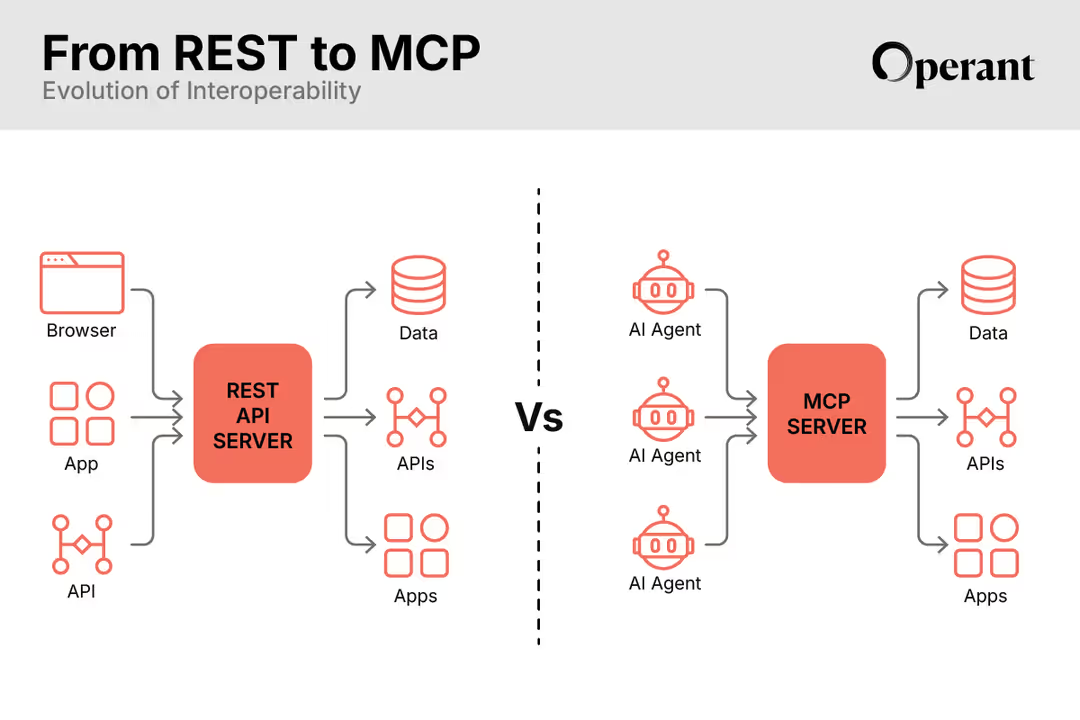

Operant's Active Approach to AI Security

AI applications don’t operate in isolation. They need to be secured in the context of your entire cloud application stack. Operant’s 3D Runtime Defense provides real-time security across every cluster and every cloud, from infra to APIs.

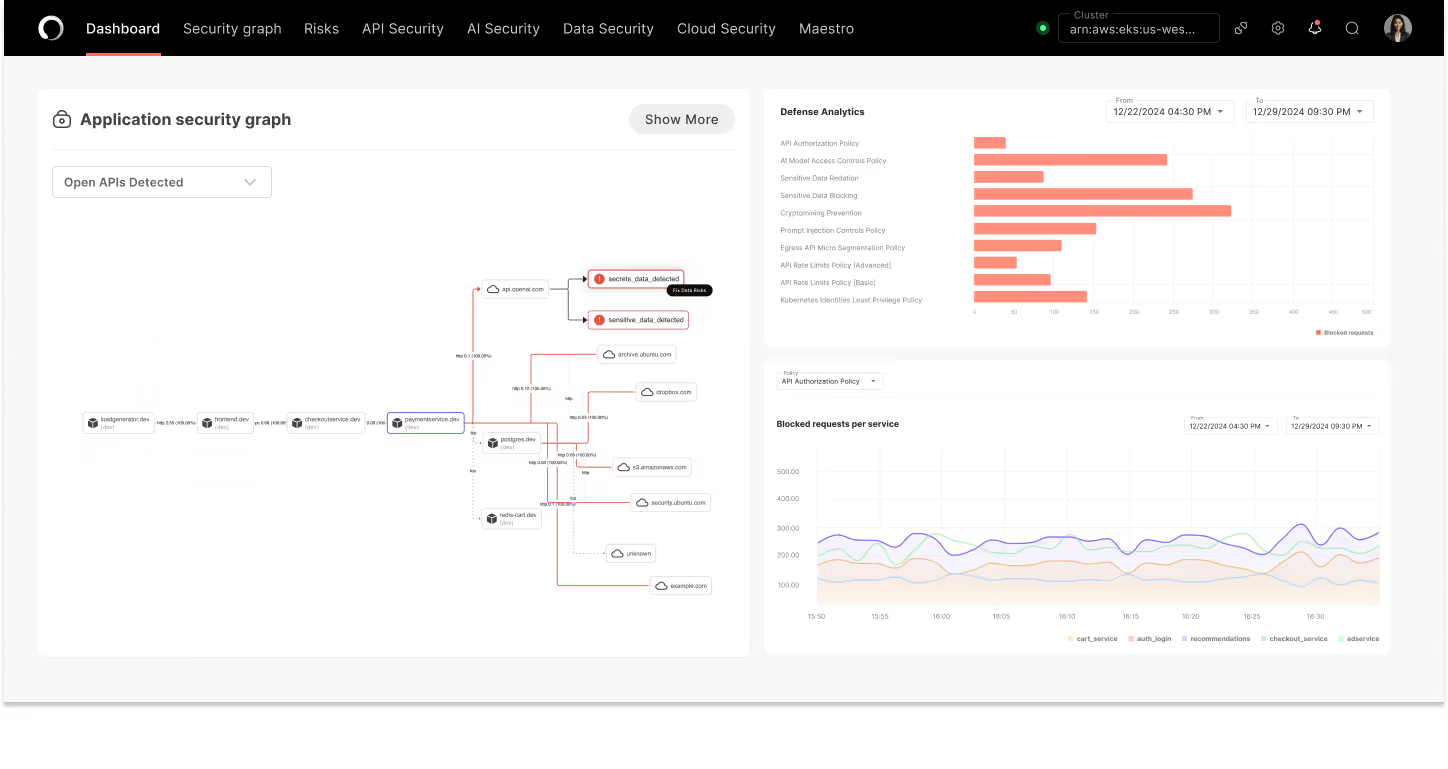

Full AI Application Visibility

Gain full visibility into every live AI interaction within your application environment so you can confidently manage AI-driven data flows and compliance needs.

AI Threat Detection

Detect and prioritize AI-specific risks like prompt injection, LLM poisoning, model theft, and sensitive data leakage. Identify and proactively block threats that actually impact your application.

Active Defense & Runtime Enforcement

Take immediate action against security risks with automated in-line defenses, including in-line auto-redaction and obfuscation of PII and other sensitive data.
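To make the idea of in-line redaction concrete, here is a minimal sketch of pattern-based masking applied to text before it reaches a model or a log. This is not Operant's implementation. The `PII_PATTERNS` table and the `redact` helper are hypothetical names chosen for illustration, and the two regular expressions cover only simple email and US SSN formats.

```python
import re

# Illustrative patterns only (an assumption for this sketch); real PII
# detection needs much broader coverage than two regular expressions.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched value with a [LABEL] placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane@example.com, SSN 123-45-6789"))
```

Running the redaction in the request path, rather than as a later batch job, means the raw values never leave the trust boundary.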

Operant secures all your GenAI, LLM, and RAG applications, and more

Enterprise-Ready AI Security

Security That Fuels Development Speed

Operant enables you to innovate faster with secure-by-default applications, eliminating the operational burden of lengthy engineering projects.

Single-Step, Zero-Instrumentation Deployment

Deploy in minutes without the need for complex integrations or instrumentation, so you can see value immediately without impacting workflows.

Purpose-Built for Cloud-Native AI Environments

Operant integrates seamlessly into Kubernetes and other cloud-native infrastructure, enabling proactive, frictionless defense.

Skip the POC.

Deploy Operant Today.

Single-step Helm install.

Zero instrumentation. Zero integrations.

Works in <5 minutes.

AI Application Protection Fuels Fast and Responsible AI Development

Secure Your Entire AI Application Stack

Reduce Costs & Tooling Overload

Do More With Less

Scale AI Applications Faster

Operant discovers and blocks the Top OWASP LLM Risks

OWASP LLM Security Risks

Operant:

Prompt Injection

This manipulates a large language model (LLM) through crafty inputs, causing unintended actions by the LLM. Direct injections overwrite system prompts, while indirect ones manipulate inputs from external sources.

Insecure Output Handling

This vulnerability occurs when an LLM output is accepted without scrutiny, exposing backend systems. Misuse may lead to severe consequences like XSS, CSRF, SSRF, privilege escalation, or remote code execution.
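As a small illustration of treating model output as untrusted, the sketch below escapes an LLM response before it is embedded in an HTML page, which addresses the XSS case specifically. The function name `render_llm_output` is hypothetical. The other consequences listed above (SSRF, remote code execution, and so on) need their own controls and are not covered here.

```python
import html


def render_llm_output(raw: str) -> str:
    """Escape model output so it is displayed as text, not parsed as markup."""
    # quote=True also escapes " and ', which matters inside HTML attributes.
    return html.escape(raw, quote=True)


print(render_llm_output("<script>alert(1)</script>"))
```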

Training Data Poisoning

This occurs when LLM training data is tampered with, introducing vulnerabilities or biases that compromise security, effectiveness, or ethical behavior. Sources include Common Crawl, WebText, OpenWebText, and books.

Model Denial of Service

Attackers cause resource-heavy operations on LLMs, leading to service degradation or high costs. The vulnerability is magnified due to the resource-intensive nature of LLMs and unpredictability of user inputs.
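One common mitigation, sketched here under assumed limits, is to cap prompt size and the number of requests each client can make in a time window before anything reaches the model. The class name `RequestGuard` and the three constants are hypothetical values for illustration, not recommendations and not a description of how Operant enforces limits.

```python
import time
from collections import defaultdict, deque

# Hypothetical limits chosen for this sketch.
MAX_PROMPT_CHARS = 4000
MAX_REQUESTS = 5
WINDOW_SECONDS = 60.0


class RequestGuard:
    """Sliding-window request limiter with a prompt-size cap."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock                  # injectable for testing
        self._history = defaultdict(deque)   # client_id -> request timestamps

    def allow(self, client_id: str, prompt: str) -> bool:
        # Reject oversized prompts outright.
        if len(prompt) > MAX_PROMPT_CHARS:
            return False
        now = self._clock()
        recent = self._history[client_id]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= WINDOW_SECONDS:
            recent.popleft()
        if len(recent) >= MAX_REQUESTS:
            return False
        recent.append(now)
        return True
```

A character cap is only a rough proxy for model cost. A production limiter would more likely count tokens and account for output length as well.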

Supply Chain Vulnerabilities

The LLM application lifecycle can be compromised by vulnerable components or services, leading to security attacks. Using third-party datasets, pre-trained models, and plugins can add vulnerabilities.

Sensitive Information Disclosure

LLMs may inadvertently reveal confidential data in their responses, leading to unauthorized data access, privacy violations, and security breaches. It’s crucial to implement data sanitization and strict user policies to mitigate this.

Insecure Plugin Design

LLM plugins can have insecure inputs and insufficient access control. This lack of application control makes them easier to exploit and can result in consequences like remote code execution.

Excessive Agency

LLM-based systems may undertake actions leading to unintended consequences. The issue arises from excessive functionality, permissions, or autonomy granted to the LLM-based systems.
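A minimal sketch of limiting agency is an explicit allowlist: the model can request a tool by name, but only registered tools are ever executed. The names `ALLOWED_TOOLS`, `search_docs`, and `dispatch` are hypothetical and exist only for this example.

```python
from typing import Callable, Dict


def search_docs(query: str) -> str:
    """Stand-in for a harmless, read-only tool."""
    return f"results for {query!r}"


# Only tools registered here can be invoked on the model's behalf.
ALLOWED_TOOLS: Dict[str, Callable[[str], str]] = {"search_docs": search_docs}


def dispatch(tool_name: str, argument: str) -> str:
    """Run a model-requested tool only if it is on the allowlist."""
    tool = ALLOWED_TOOLS.get(tool_name)
    if tool is None:
        raise PermissionError(f"tool {tool_name!r} is not allowed")
    return tool(argument)
```

Denying by default keeps the set of possible actions small and reviewable, whatever the model asks for.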

Model Theft

This involves unauthorized access to, copying of, or exfiltration of proprietary LLMs. The impact includes economic losses, compromised competitive advantage, and potential access to sensitive information.
