3D Runtime Defense

In the Age of AI, Active Runtime Defense across every layer is the only way to stop the most critical and "unstoppable" attacks.

New vulnerabilities need new solutions

Cloud adoption has expanded the attack surface, while security enforcement still lags dangerously behind.

60% of data breaches in 2022 were caused by known vulnerabilities awaiting a patch.

93% of cloud-native companies experienced at least one security incident in their Kubernetes environment in the last 12 months.

55% of cloud engineering teams have delayed or slowed down application deployment due to security concerns.

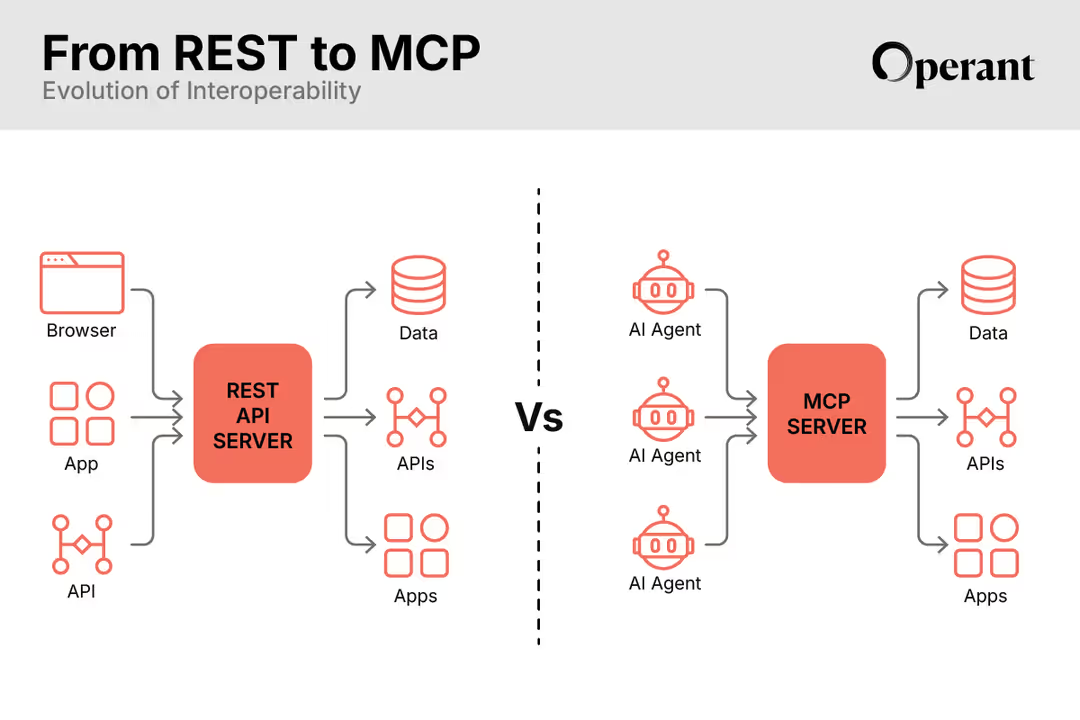

Discover

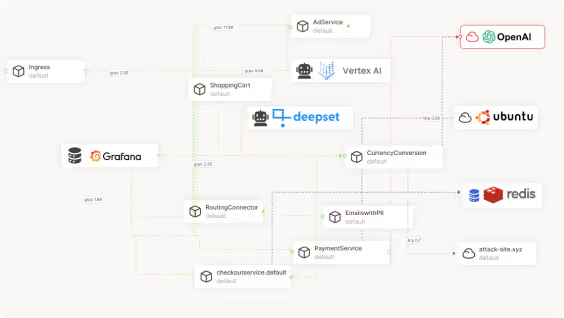

Instantly map AI models, APIs, and dependencies in real time.

Continuously uncover shadow AI data flows, ghost APIs, and third-party interactions.

Track and analyze all AI providers and third-party models, including OpenAI, Cohere, Bedrock, and more.

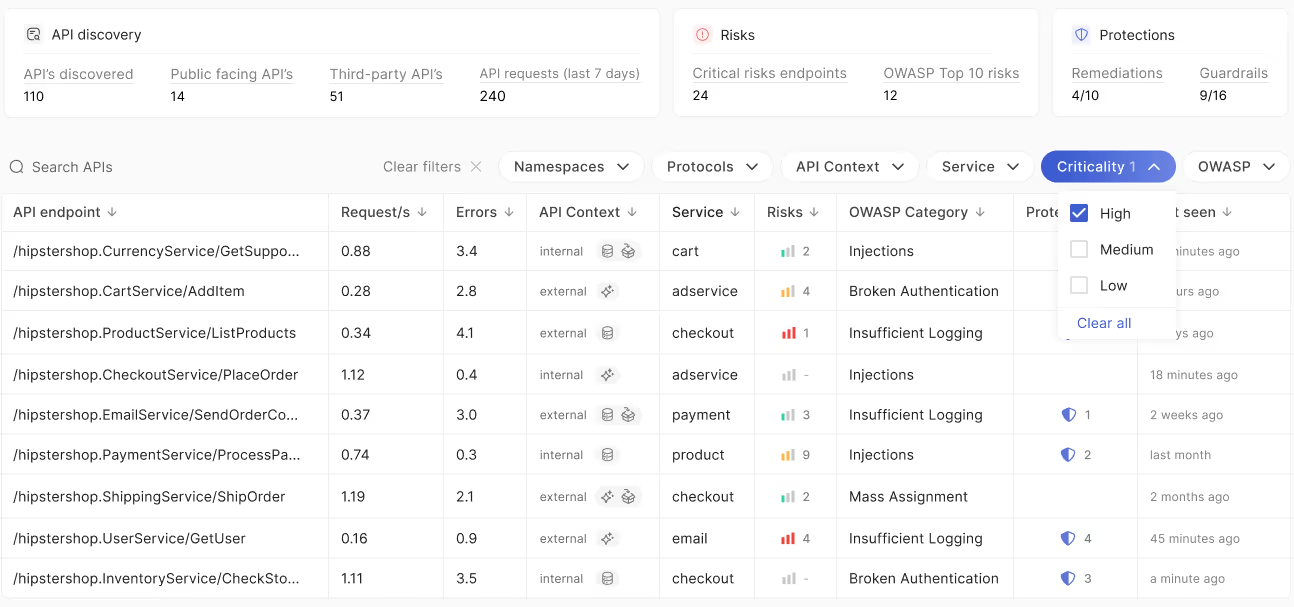

Detect

Detect and prioritize AI-specific risks such as prompt injection, data poisoning, model theft, and sensitive data leakage.

Detect overprivileged access at runtime, including access gained through prompt injections or jailbreaks.

Monitor ingress and egress data flows to prevent unauthorized access to critical data such as PII, PCI, PHI, API keys, and more.
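To make egress monitoring concrete, here is a minimal sketch of one possible check on an outbound request: block unknown destinations (such as a ghost API) and flag sensitive data headed to approved ones. It is not Operant's implementation. The allowlist, the regex detectors (including the OpenAI-style `sk-` key prefix), and the function names `classify` and `inspect_egress` are hypothetical and chosen for illustration. Card numbers are confirmed with the standard Luhn checksum to cut false positives.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist of approved egress destinations (illustrative only).
ALLOWED_HOSTS = {"api.openai.com"}

# Simplified detectors; the "sk-" key prefix is an assumption for illustration.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
API_KEY_RE = re.compile(r"\bsk-[A-Za-z0-9]{20,}\b")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,16}\b")


def luhn_ok(candidate: str) -> bool:
    """Return True if the digits in `candidate` pass the Luhn checksum."""
    digits = [int(c) for c in candidate if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(payload: str) -> set[str]:
    """Return the sensitive-data categories detected in `payload`."""
    found = set()
    if SSN_RE.search(payload):
        found.add("SSN")
    if API_KEY_RE.search(payload):
        found.add("API_KEY")
    # Card-like digit runs only count if they pass the Luhn check.
    if any(luhn_ok(m.group()) for m in CARD_RE.finditer(payload)):
        found.add("CARD")
    return found


def inspect_egress(url: str, payload: str) -> tuple[str, set[str]]:
    """Decide whether an outbound request is allowed, flagged, or blocked."""
    findings = classify(payload)
    host = urlparse(url).hostname or ""
    if host not in ALLOWED_HOSTS:
        return "block", findings  # unknown destination, e.g. a ghost API
    if findings:
        return "flag", findings  # approved destination, sensitive data present
    return "allow", findings
```

Regex detectors like these are deliberately simple; real classifiers typically add context, validation, and many more data types.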

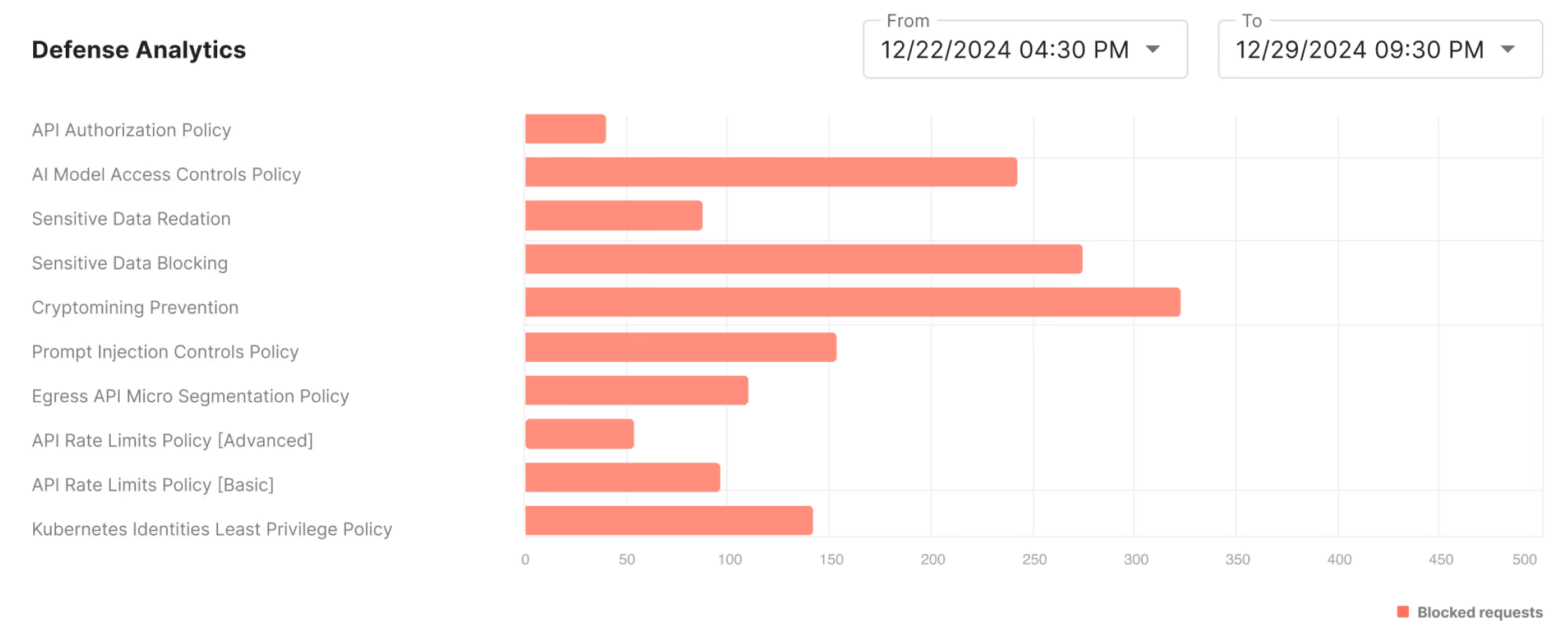

Defend

Automatically redact and block sensitive data flows, safeguarding data privacy by default.

Isolate suspicious third-party containers and AI models to prevent malicious activity.

Limit sensitive API calls, including AI endpoint usage, with rate limiting and token enforcement.
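Rate limiting is commonly built on a token bucket: each caller gets a budget that refills at a fixed rate, and a request proceeds only if enough budget remains. The sketch below is a generic token bucket, not Operant's mechanism. It assumes one reading of "token enforcement", a per-client budget of LLM tokens, so each call's `cost` is the number of tokens it consumes. The class, the `allow_call` helper, and the budget numbers are hypothetical.

```python
import time


class TokenBucket:
    """Generic token-bucket rate limiter (illustrative sketch)."""

    def __init__(self, capacity: float, refill_per_sec: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.tokens = capacity  # start with a full budget
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Refill based on elapsed time, then spend `cost` if enough budget remains."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Hypothetical per-client budgets: 10,000 LLM tokens of burst, refilled at 100 per second.
_buckets: dict[str, TokenBucket] = {}


def allow_call(client_id: str, llm_tokens: int) -> bool:
    """Return True if `client_id` may make a call consuming `llm_tokens` tokens."""
    if client_id not in _buckets:
        _buckets[client_id] = TokenBucket(capacity=10_000, refill_per_sec=100)
    return _buckets[client_id].allow(llm_tokens)
```

Injecting the `clock` keeps the bucket deterministic in tests. In a multi-instance deployment, the budgets would need shared storage instead of a process-local dict.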

Are you ready for the Wild Wild West?

A fintech company using an LLM-based fraud detection app began feeding new user application records into its model through calls to OpenAI's API. Over time, PII from those records began to leak. The company didn't notice the breach because it was not monitoring data at egress, where a ghost API was piggybacking on the OpenAI API calls. Without runtime detection or active defense, the leakage continued until the volume of records grew large enough to be flagged in the SIEM logs. By then, more than 10,000 SSNs had already been stolen, resulting in over $50 million in data breach costs.

Had the company been using Operant's secure-by-default guardrails and in-line auto-redaction of sensitive data, it would have avoided the breach entirely. In-line auto-redaction would have kept PII from ever leaving the native application environment while still letting the model function as intended. In parallel, Operant's sensitive data protection guardrails would have automatically flagged and blocked any leakage at egress, and Operant's API threat protection policies and Adaptive Internal Firewalls would have instantly discovered the ghost API and the unexpected data flow, detected the threat, and recommended a remediation. Once the remediation was accepted with a single click, new guardrails would have gone into place, and the Adaptive Internal Firewalls would have proactively blocked any further data leakage.
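The core idea of in-line redaction is to replace sensitive values with placeholders before a prompt leaves the application, keeping the originals local so the model's response can be re-identified afterward. The following minimal sketch assumes simple regex detectors for SSNs and email addresses. The function names `redact` and `restore` and the `[LABEL_n]` placeholder format are hypothetical, and this is not Operant's implementation.

```python
import re

# Illustrative detectors only; production redaction covers many more data types.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def redact(text: str) -> tuple[str, dict[str, str]]:
    """Swap sensitive values for placeholders; return redacted text and a local mapping."""
    mapping: dict[str, str] = {}
    counters = {"SSN": 0, "EMAIL": 0}

    def make_sub(label: str):
        def sub(match: re.Match) -> str:
            counters[label] += 1
            token = f"[{label}_{counters[label]}]"
            mapping[token] = match.group()  # original value never leaves the app
            return token
        return sub

    text = SSN_RE.sub(make_sub("SSN"), text)
    text = EMAIL_RE.sub(make_sub("EMAIL"), text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Re-insert original values into text (e.g. a model response) using the local mapping."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text
```

Only the redacted string would be sent to the external model API; `restore` runs on the response inside the application. Restoration assumes the model echoes placeholders verbatim, which is not guaranteed.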
