Enforcing Zero Trust for AI APIs with Operant’s Adaptive Internal Firewalls

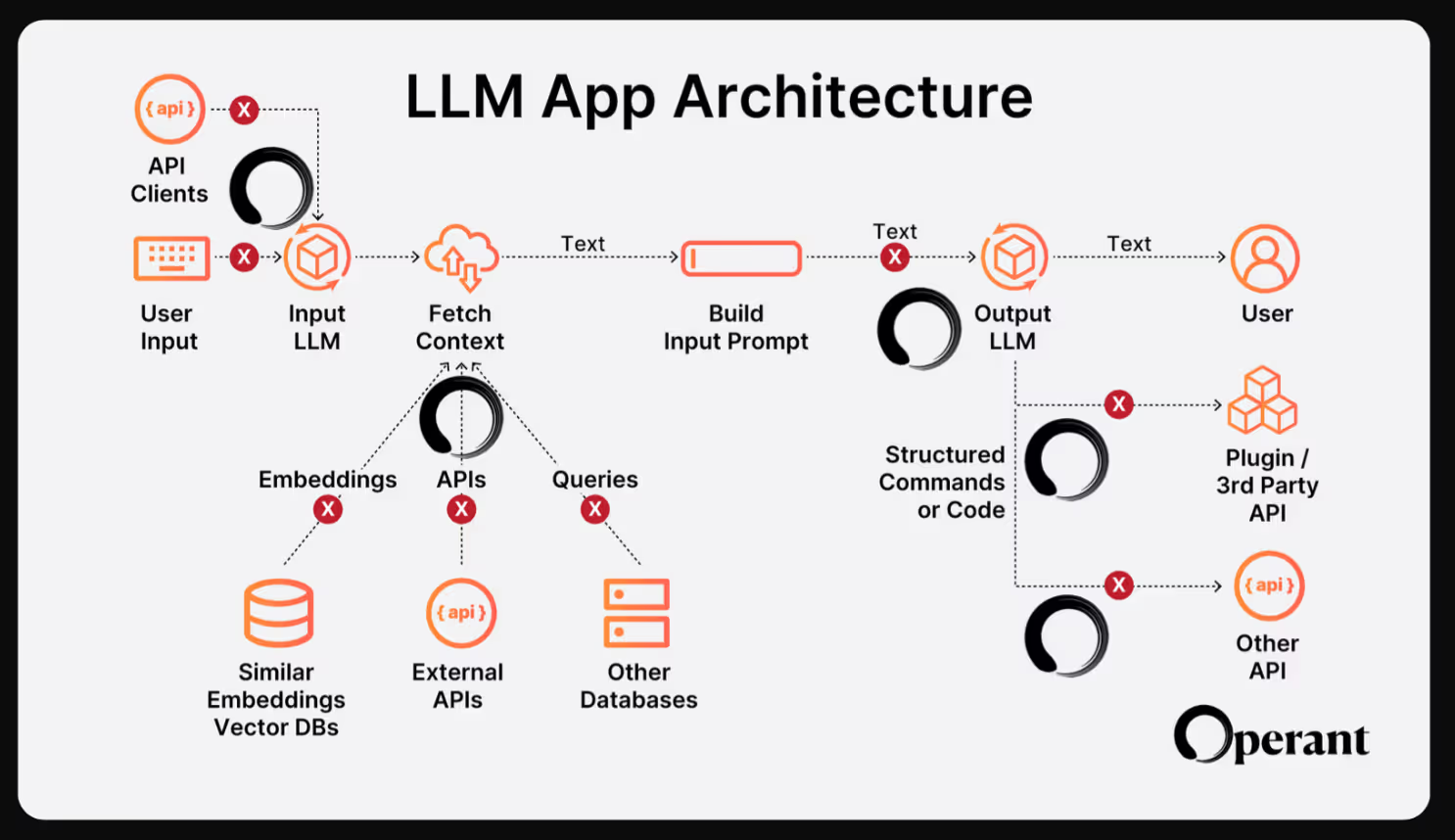

Today, we begin a series on Operant’s new cutting-edge AI security capabilities, starting with Adaptive Internal Firewalls (AIFs). AIFs operate inside the application perimeter and enforce Zero Trust for AI across every layer of the AI application stack, from models to APIs, without getting in the way of desired traffic flows or slowing down development.

How urgent is Zero Trust for AI Applications really?

TL;DR - Pretty urgent… Scary urgent. And fully achievable without getting in the way of progress (really!).

Before the current AI revolution, teams adopting AI would train internal models on internal data before deploying them live, and the live models in production usually didn’t have access to internal organizational data or APIs. With LLM-based apps, teams no longer have to build the foundational models themselves; instead, they adopt them from third parties through APIs. Teams rapidly tune these models with their own data (making them feel like a home-grown app) and then quickly deploy them in production. At their core, though, these third-party LLM apps running live in production are still foreign entities. They have insecure connections to the outside world, and they have access to large amounts of organizational data and APIs to enhance prompts or take action as agents.

This is actually a pretty scary place for the world of AI development to be in from a security perspective, but it doesn’t have to be. AI apps are here to stay, and at Operant, we are proud to accelerate Responsible AI Development by bringing Zero Trust security to AI Applications in a way that can be deployed in minutes (not days, weeks, months or years) so that the security that we all need can keep pace with the innovation we all desire.

Third-party software and API risks were already on the rise due to an increase in API key leakage, data leakage, and weak access controls. That threat and its potential consequences are far greater in the Age of AI, now that the third-party risk resides inside Kubernetes clusters as a black box with keys to all of the organizational data, along with the ability to take actions using other third-party agents and APIs (also black boxes).

Take, for example, a new AI app that a team may develop for fraud detection using an open source ML model that connects to OpenAI and HuggingFace. For this app to work, it needs access to the user PII data (sitting in an S3 bucket) that it analyzes for signs of fraud. Yet that necessary interaction between the insecure model and the PII data also creates major new risks, since the default architecture for 3rd party APIs is to allow unblocked open access to the internet. This is prime territory for a data exfiltration attack, as the PII data can easily be leaked to a malicious outsider. It is even riskier if the company running the model sends unsanitized PII data directly to OpenAI’s API (a practice that is not recommended from a Responsible AI perspective, but happens surprisingly often in the real world).

This new app needs fine-grained Zero Trust access controls that allow only the right traffic through while blocking unauthorized access, not just at the WAF, but within the application itself. In a modern architecture, that means Zero Trust policies must be based on relevant cloud-native identities rather than just network-layer IPs, which don’t work within ephemeral Kubernetes environments, and they must scale without drift as the application footprint grows.

And because GenAI-based attack patterns change constantly, static code scanning and container scanning lose their value the longer your app is running. You need to keep track of runtime behavioral indicators of data leakage and data exfiltration all the time, both of which can easily be detected when the right controls and runtime visibility are in place from the get-go.
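As one illustration of the unsanitized-PII risk described above, the sketch below redacts a couple of common PII patterns from a prompt before it leaves for a third-party API, and counts what it found so the event can be surfaced as a runtime indicator. This is not Operant’s implementation: the `PII_PATTERNS` table and the `redact` function are hypothetical names, and two regular expressions are nowhere near full PII coverage.

```python
from __future__ import annotations

import re

# Hypothetical, deliberately tiny pattern set for illustration only.
# Real PII detection needs far broader coverage than two regexes.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US SSN-like shape
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matched PII with a label and report how many hits each label had."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        # subn returns the rewritten text and the number of substitutions made.
        text, hits = pattern.subn(f"[{label}]", text)
        if hits:
            counts[label] = hits
    return text, counts


if __name__ == "__main__":
    prompt = "Check account for jane@example.com, SSN 123-45-6789"
    clean, found = redact(prompt)
    print(clean)
    print(found)
```

A non-empty `counts` result is the kind of signal a team might log or alert on, since it means PII was about to cross the application boundary.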

Security teams have been fighting blind with the incredibly complex challenge of trying to figure out how to manage these untrusted black boxes without holding back business-critical functionality, and now it is time to take the reins to bring AI applications up to the standards expected by teams, leaders, and end-users.

Adaptive Internal Firewalls to the Rescue

When it comes to security, you can’t secure what you can’t see. So the first thing that Operant’s Zero Trust AI Application Protection does is bring insights into:

- What are the 3rd party AI providers in use?

- What 3rd party AI APIs are in use, and what does their usage look like?

- What is the overall risk to organization data in terms of AI API usage patterns?

- Which internal apps and services are communicating with 3rd party AI API providers?

Together, the answers to these questions let teams assess risks end to end.
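To make the questions above concrete, here is a minimal sketch of how such an inventory could be derived from egress flow records. The record shape (`source`, `dest_host`), the `AI_PROVIDER_HOSTS` table, and the `inventory` function are assumptions made for this example; they do not describe how Operant collects or models this data.

```python
from collections import defaultdict

# Hypothetical mapping from API hostnames to provider names.
AI_PROVIDER_HOSTS = {
    "api.openai.com": "OpenAI",
    "huggingface.co": "HuggingFace",
}


def inventory(flows):
    """Count calls per (AI provider, internal service) from egress flow records.

    Each flow is assumed to be a dict with "source" (internal service name)
    and "dest_host" (external hostname). Non-AI destinations are ignored.
    """
    usage = defaultdict(lambda: defaultdict(int))
    for flow in flows:
        provider = AI_PROVIDER_HOSTS.get(flow["dest_host"])
        if provider is None:
            continue  # not a known AI provider
        usage[provider][flow["source"]] += 1
    # Convert nested defaultdicts to plain dicts for easy printing and comparison.
    return {provider: dict(services) for provider, services in usage.items()}


if __name__ == "__main__":
    sample = [
        {"source": "fraud-detector", "dest_host": "api.openai.com"},
        {"source": "fraud-detector", "dest_host": "api.openai.com"},
        {"source": "fraud-detector", "dest_host": "huggingface.co"},
        {"source": "billing", "dest_host": "example.com"},
    ]
    print(inventory(sample))
```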

However, as all security engineers everywhere know only too well, visibility alone does not mean protection.

Security teams can now easily apply Operant’s AI API security policy bundle and define policies such as always enforcing TLS, never unencrypted connections, for any external communication with 3rd party AI APIs. They can also apply identity-based segmentation policies that define which internal service identities can talk to which external 3rd party APIs and services, and block all other access. Operant recommends these policies based on your real-world application traffic flow and live risk profile. They can be applied and enforced in your environment directly through the Operant interface using a drag-and-drop workflow, so no instrumentation and no code fixes are required to get the protection you need to fully secure your AI apps today.
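The logic behind the two kinds of policy described above (mandatory TLS, and identity-based segmentation with everything else blocked) can be sketched in a few lines. This is an illustrative default-deny evaluator, not Operant’s policy engine or policy format: `EgressRequest`, `ALLOWED_EGRESS`, `evaluate`, and the `prod/fraud-detector` identity are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EgressRequest:
    source_identity: str  # a workload identity (e.g. namespace/service account), not an IP
    dest_host: str        # external API hostname
    tls: bool             # whether the connection is encrypted


# Hypothetical allowlist: which identities may reach which external hosts.
# Anything not listed here is denied.
ALLOWED_EGRESS = {
    "prod/fraud-detector": {"api.openai.com"},
}


def evaluate(request: EgressRequest) -> str:
    """Return "allow" or a "deny: ..." reason for an outbound request."""
    if not request.tls:
        return "deny: TLS required"
    allowed_hosts = ALLOWED_EGRESS.get(request.source_identity, set())
    if request.dest_host not in allowed_hosts:
        return "deny: identity not authorized for destination"
    return "allow"


if __name__ == "__main__":
    print(evaluate(EgressRequest("prod/fraud-detector", "api.openai.com", tls=True)))
    print(evaluate(EgressRequest("prod/fraud-detector", "api.openai.com", tls=False)))
    print(evaluate(EgressRequest("prod/billing", "api.openai.com", tls=True)))
```

Keying the allowlist on workload identity rather than IP address is what keeps a rule like this stable when pods are rescheduled and their addresses change.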

We’re also proud to offer an additional layer of value for companies that operate in highly regulated industries, such as banks and other financial institutions. As they accelerate their use of AI for business-critical functionality like fraud detection and risk modeling, Operant’s enforcement of Zero Trust controls seamlessly proves and maintains regulatory compliance with standards like NYDFS, which require companies to monitor and control all the “data in use” as it flows through internal and egress networks.

Operant’s visibility into the live application traffic flows and the protection provided by Adaptive Internal Firewalls in enforcing Zero Trust access controls save teams months of mind-numbing manual work to meet aggressive regulatory deadlines, while making applications more secure and removing barriers to accelerated AI adoption.

Operant’s Zero Trust for AI controls, including our Adaptive Internal Firewalls, are available in the product today.

Experience Operant’s Full Circle AI Security Suite for Yourself

Sign up for a trial to experience the power and the ease of Operant’s complete application protection for yourself.

Zero instrumentation. Zero integrations. Works in <5 minutes.

Operant is proud to be SOC 2 Type II Compliant and a contributing member of CNCF and the OWASP Foundation.
