Application Security Then and Now: Keymakers, Sinking Ships, and the Matrix

Whether you are a fan or not, as a technologist, you probably have opinions about “The Matrix.” While Neo, Morpheus, Trinity, and the Oracle are important characters throughout the series, one of the most pivotal characters, in a small and underappreciated role, was “The Keymaker.”

While all the action, from dodging bullets to jumping off buildings, thrilled theatergoers (especially in 1999, when no one had ever seen CGI like that before), it’s easy to forget about the keys and the source code that keep the Matrix… well, a matrix. If they’d just focused their attention on key vulnerabilities, perhaps their epic wouldn’t have taken so many movies to resolve, but what would have been the fun in that?

But what significance did “the Keymaker” actually have in the context of the software development world that fed the Matrix’s lore in 1999? Back then, the most modern large technology companies relied on many types of software keys that were critical to the entire monolithic stack built upon them. Best-in-class teams built security and privacy capabilities by carefully weaving together layers and layers of security across users, native applications, third-party applications, and the backend services that all of this software was calling, and large armies of software engineers were required to build and manage it.

In many ways, the ideas of Zero Trust (a term actually coined in 1994, in seminal work on formalizing trust) were built into these security architectures, embedded all the way into secure hardware enclaves. The keymakers whose genius encoded these complex systems were almost mythical in their power and influence, especially for people who went unnoticed except by the few software executives in the know.

The Old World: Virtual Firewalls Meet Military Fortifications

In that monolithic world, critical software applications were completely visible to and controlled by the operators. There was “a single network firewall” in front of the data center that would create a different virtual presence for all critical application and database servers. Engineers just had to protect this virtual landscape, and two decades later, the majority of current solutions are still designed for that kind of security.

As part of that, many network-based security policies were put in place. If an application needed access to sensitive data, operators would place it in a deep subnet, where its requests passed through several network nodes that checked that access was consistent and trusted. For example, if an application needed to talk to a database that sat behind a certain hostname, requests to that hostname had to go through a network firewall. The credit card processing database was protected behind one firewall, while sensitive health information sat behind another, akin to locked doors within a fortress.

An intruder might make it past the main gate or firewall, but each individual locked door was still meant to make it harder to access everything at once if the fortress had been compromised. Since these monoliths had all of their “individual” databases living in a scaled-out compute and storage farm inside a data center, multiple layers of firewalls would be laid out within the data center to produce contextual segmentation in a very physical way. Companies were literally segmenting out all these sensitive nodes and layers of applications, following principles that had been used for military fortifications for thousands of years. The whole thing was very tangible, very comprehensible, and absolutely impossible to scale.
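The "locked doors" idea above can be sketched as a small rule table. This is only an illustrative sketch: the subnet ranges, segment names, and allowed flows are hypothetical and are not taken from any real deployment.

```python
import ipaddress

# Hypothetical subnets, one per "room" in the fortress (assumed for illustration).
SEGMENTS = {
    "dmz": ipaddress.ip_network("10.0.0.0/24"),
    "app": ipaddress.ip_network("10.0.1.0/24"),
    "cards": ipaddress.ip_network("10.0.2.0/24"),
    "health": ipaddress.ip_network("10.0.3.0/24"),
}

# Each inner firewall is a locked door: only these (source, destination)
# segment pairs are let through. Everything else is denied.
ALLOWED_FLOWS = {
    ("dmz", "app"),
    ("app", "cards"),
    ("app", "health"),
}


def segment_of(address):
    """Return the name of the segment containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None


def is_allowed(src, dst):
    """Check whether traffic crossing from src to dst passes the firewalls."""
    src_seg = segment_of(src)
    dst_seg = segment_of(dst)
    if src_seg is None or dst_seg is None:
        return False  # unknown hosts never get through a door
    return (src_seg, dst_seg) in ALLOWED_FLOWS
```

Under these assumed rules, a host in the application subnet can reach the card database, but an intruder who has only breached the outer segment cannot: `is_allowed("10.0.0.7", "10.0.2.9")` is `False`. The table is easy to read precisely because it is small and static, which is also why it does not scale.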

The New World: Sailing Fast Over Dark Murky Waters

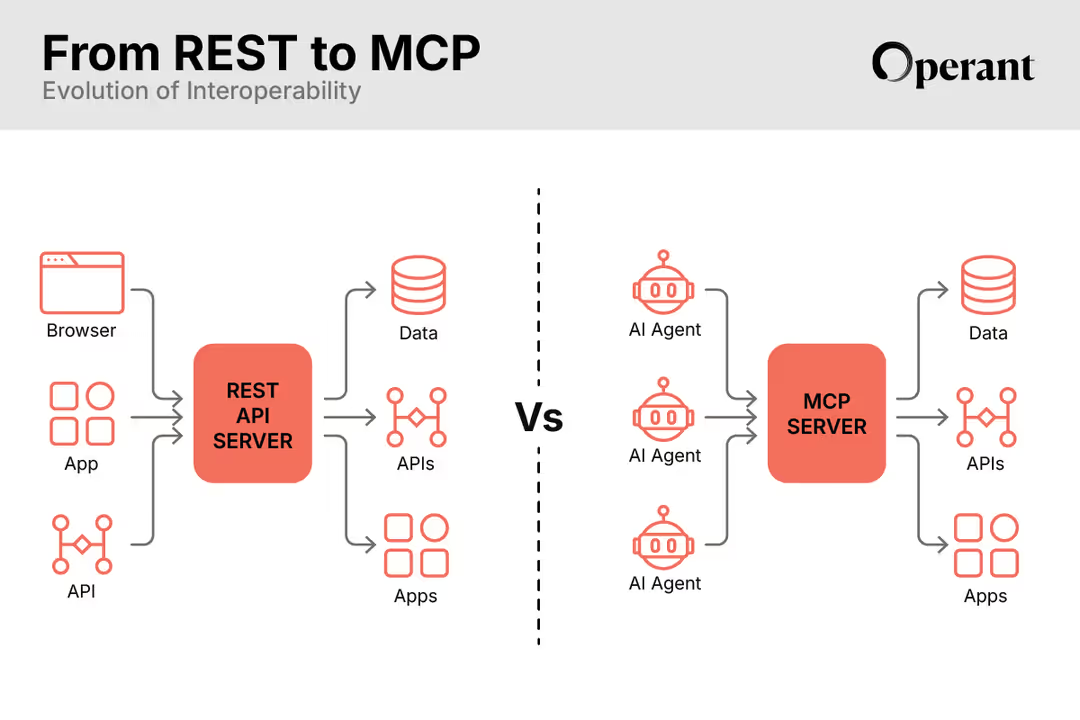

In the new world, everything is in the cloud. You don't have physical control of what database sits behind what firewall. Often, you don't even control that firewall anymore. In such a scenario, you have very limited control over how to protect your credit card database or health information database, all of which are now just an API call away.

Now you are setting up layers and layers of these APIs (instead of firewalls), and you assume that you are only allowing trusted services to call those APIs. For example, credit card processing should be accessible from the checkout service, so, in theory, only the checkout service would be able to query the credit card and then process it. But in the flat world of cloud-native, you don't have control over the network pieces underneath, and there are ways that other services and APIs can also call the credit card database directly. Meanwhile, you must move forward, developing and releasing faster than ever, like a packed container ship barreling forward on an endless sea without any control over or visibility into what lies beneath the surface of the water.
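The "only the checkout service may call the credit card API" assumption can be written down as a default-deny allowlist. The sketch below is a minimal illustration with hypothetical service and API names; it also assumes the caller's identity has already been verified by some other mechanism, which the sketch does not cover.

```python
# Hypothetical service and API names, used only for illustration.
API_ALLOWLIST = {
    "credit-card-api": {"checkout"},
    "health-records-api": {"patient-portal"},
}


def authorize(caller, api):
    """Default deny: allow a call only if the caller is explicitly listed.

    Assumes `caller` is an already-verified service identity.
    """
    return caller in API_ALLOWLIST.get(api, set())


# The checkout service is listed for the card API, so its call is allowed.
print(authorize("checkout", "credit-card-api"))
# Another service on the same flat network is not listed, so it is denied.
print(authorize("recommendations", "credit-card-api"))
```

The point of the sketch is the gap it exposes: in a flat network, nothing enforces this table unless every API actually performs the check, so any API that skips it is reachable by every other service.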

Now that you can’t actually go into your AWS data center and set up physical segments, you have to find a different way to secure these API calls. Back when you had 1,000 virtual machines, you could have used manual rules or segments to govern which application talks to which other application, but for this to work, you needed to fully understand and document those relationships. When they were relatively static and manageable in volume, that was something you could tackle with a team. Now, with thousands of services deployed as millions of containers, you have a much, much larger and more complex system, with potential gaps that add extensive risk to your whole application.
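To get a rough feel for why manual rules stop working, it helps to count how many caller-to-callee relationships could exist. The sketch below assumes the worst case, in which any service can reach any other; real systems use far fewer paths, but each possible path is one that someone would have to rule in or out.

```python
def possible_call_paths(service_count):
    """Number of ordered (caller, callee) pairs among distinct services."""
    return service_count * (service_count - 1)


# Illustrative sizes only; the counts grow roughly with the square of the size.
for count in (10, 1000, 5000):
    print(count, possible_call_paths(count))
# prints:
# 10 90
# 1000 999000
# 5000 24995000
```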

Consider the difference between securing a 19th-century merchant ship and securing a modern container ship carrying hundreds of millions of dollars in cargo. Just this March, a container ship sank off the Azores Islands after a fire caused cascading failures that were then compounded by rough seas, sending $155 million of cargo to the bottom of the Atlantic. It took vulnerabilities in the containers and the ship’s fire containment systems, combined with the external ecosystem, to cause such a catastrophic failure. Any piece of it might have been secured and managed differently if it were operating at a human-manageable scale, but it wasn’t, just as modern cloud stacks aren’t.

Conclusions

We are operating at a scale and complexity unfathomable even twenty years ago, and it is no wonder that the fortifications and keymakers of the old world need to adapt and evolve to keep today’s world safe and secure. While we are not starting from scratch and can take with us many lessons, both technical and humanistic, from what has come before, we also need to fundamentally reinvent our approach to application security so that we are no longer dependent on systems and structures that can’t scale to conquer today’s vast challenges.
