A Brief History of the Cloud: It's Turtles All The Way Down

Stephen Hawking’s ‘A Brief History of Time’ starts with the following excerpt:

“A well-known scientist (some say it was Bertrand Russell) once gave a public lecture on astronomy. He described how the earth orbits around the sun and how the sun, in turn, orbits around the center of a vast collection of stars called our galaxy. At the end of the lecture, an old lady at the back of the room got up and said: ‘What you have told us is rubbish. The world is really a flat plate supported on the back of a giant tortoise.’ The scientist gave a superior smile before replying, ‘What is the tortoise standing on?’ ‘You’re very clever, young man, very clever,’ said the old lady. ‘But it’s turtles all the way down!’”

While the orbital nature of the solar system is a scientifically established fact, I can’t help but think that the lady at the back of the room was onto something, perhaps in an entirely different context: that of a ‘Brief History of the Cloud’. In this context, an application rests on top of a virtual machine, which rests on top of a hypervisor, which rests on top of a server and a fabric of switches and storage. If application developers were to treat these layers as abstractions (or… turtles) that are someone else’s problem, could the cloud be just a collection of turtles all the way down that they never need to worry about?

That would be all nice and dandy, but it is far from reality. Carrying this train of thought into the real world: if applications rest on top of infrastructure turtles, troubleshooting how those applications behave often means understanding and analyzing the underlying turtles’ behavior (thoroughly enough to make Sir David Attenborough proud). In fact, how the infrastructure turtles are configured remains key to how applications perform, how much they cost, and how secure, resilient, and scalable they are.

In the first phase of cloud adoption, most applications were either lifted and shifted to the cloud as-is or architected as monoliths. (To be clear, this first phase of cloud adoption remains the present reality for many organizations.)

From an application-versus-infrastructure perspective, the boundaries in this monolithic architecture are fairly clearly defined: applications are the processes running inside VMs, while everything else (the VMs, VPCs, network subnets, storage volumes, databases, and so on) is infrastructure, or “turtles” from the perspective of application development teams.

In this phase of cloud adoption, what counts as application and what counts as infrastructure is largely determined by which team is responsible for provisioning and operating each layer.

Developers write and test the applications, while infrastructure engineers provision and configure the underlying infrastructure (VMs, networks, storage, databases, and the like) and operate it to meet the availability, resilience, security, and performance goals that the business requires. When there is a problem, central SRE/DevOps teams troubleshoot the infrastructure layers, while application developers, largely unaware of the underlying cloud architecture, troubleshoot problems within their application code. Because the cloud and application architecture is relatively static, teams build up tribal knowledge about the patterns and behaviors that show up in operational logs and metrics, which is often good enough for manual troubleshooting.

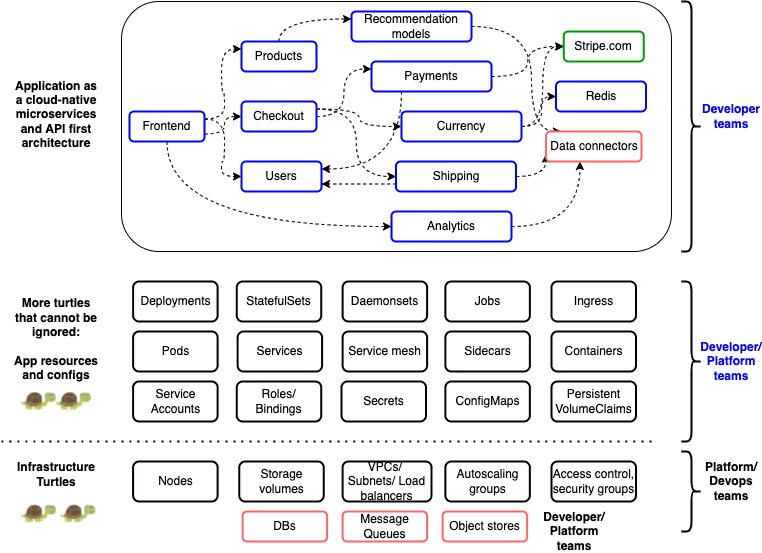

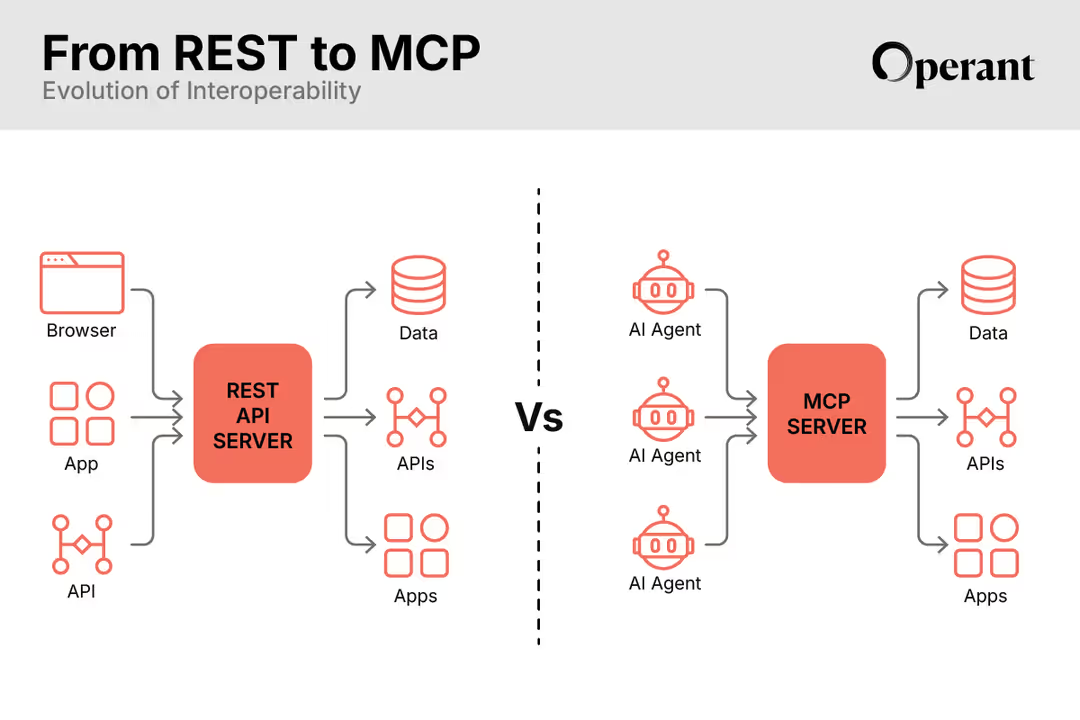

However, businesses born today have an ever-growing need to accelerate their pace of innovation, which building on the cloud from day one makes possible. Enter the second phase of cloud adoption: microservices, containers, and Kubernetes. In this phase, teams are either building their apps as cloud-native, domain-based microservices from the start, or breaking their monoliths apart into microservices in the hope that the architectural shift will help them innovate, stay agile, and ship features more frequently to meet the ever-evolving needs of the business. Kubernetes has become the de facto operating system, or platform of choice, for such containerized microservices, and its adoption keeps increasing.

Kubernetes offers many benefits, one of which is a layer of abstraction that shields application developers from the details of the underlying cloud infrastructure, yet it remains complex to adopt and operate. A significant reason for this complexity is that the boundary between what is application and what is infrastructure starts to blur when applications run on Kubernetes.

Kubernetes adds a whole slew of fine-grained abstractions that are more application-centric than coarser abstractions like VMs. An application is no longer just application code; it also involves, at the very least, six kinds of resources: Containers, Deployments, Pods, ReplicaSets, Services, and Ingresses. Beyond these six, ConfigMaps and Secrets define an application’s configuration, environment variables, and secrets. ServiceAccounts, Roles, and RoleBindings define what an application’s pods can and cannot do in a shared cluster environment, and init containers and sidecars handle environment setup, initialization, and other supporting tasks.

If an application is stateful rather than stateless, additional resources such as StatefulSets, PersistentVolumeClaims, and PersistentVolumes define its storage attributes. It does not end there. Enter CustomResourceDefinitions (CRDs), which extend Kubernetes with new resource types that may also influence an application’s behavior. For example, if an organization adopts a service mesh for application traffic visibility and traffic management, the mesh deployment adds its own sidecars and custom resources that tune application traffic behavior.

These resource configurations are more “turtles” that application development teams cannot ignore, even though they are not application code in the traditional sense. In the Kubernetes world, YAML and JSON raise their hands to say they are code too and will not be ignored!
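To make this concrete, here is a minimal sketch, in Python, of the configuration behind even a tiny stateless app: a Deployment (from which Kubernetes derives the ReplicaSet and Pods) and a Service, emitted as JSON. The app name `checkout`, the image reference, and the ports are hypothetical placeholders; only the resource fields follow the Kubernetes `apps/v1` and `v1` schemas. Note that nothing but matching labels ties the Service to the Pods.

```python
import json
from typing import Any

APP = "checkout"  # hypothetical application name
LABELS = {"app": APP}  # the only link between the Service and the Pods


def deployment(image: str, replicas: int = 2) -> dict[str, Any]:
    """Minimal apps/v1 Deployment; Kubernetes derives a ReplicaSet and Pods from it."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP, "labels": dict(LABELS)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template below.
            "selector": {"matchLabels": dict(LABELS)},
            "template": {
                "metadata": {"labels": dict(LABELS)},
                "spec": {
                    "containers": [
                        {"name": APP, "image": image, "ports": [{"containerPort": 8080}]}
                    ]
                },
            },
        },
    }


def service(port: int = 80, target_port: int = 8080) -> dict[str, Any]:
    """Minimal v1 Service that routes traffic to any Pod carrying LABELS."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP, "labels": dict(LABELS)},
        "spec": {
            "selector": dict(LABELS),
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def manifest_list(image: str) -> dict[str, Any]:
    # Wrap both objects in a v1 List, the same shape `kubectl get -o json` returns.
    return {"apiVersion": "v1", "kind": "List", "items": [deployment(image), service()]}


if __name__ == "__main__":
    print(json.dumps(manifest_list("registry.example.com/checkout:1.0"), indent=2))
```

Even this toy version is already around fifty lines of configuration before adding ConfigMaps, probes, resource limits, RBAC, or an Ingress.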

For application development teams, troubleshooting 5xx errors in Kubernetes applications involves not only looking at recent application code changes but also answering questions like these:

- Is my application pod healthy?

- Is my application service healthy?

- Which pods and services back my application?

- Is the Ingress configured correctly, and is it routing traffic to my services and pods as expected?

- Is the container image right, or is there a misconfiguration?

- Why is the liveness probe failing?

- Why is the pod not receiving any traffic? Is the sidecar network proxy configured correctly?

- The list goes on and on.
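A few of these questions can be answered mechanically from a pod’s status. The sketch below is a hypothetical helper, not part of any Kubernetes tool: it inspects a Pod object as parsed from something like `kubectl get pod <name> -o json` and reports a phase other than `Running`, containers that are not ready, containers stuck waiting (for example in `CrashLoopBackOff` or `ImagePullBackOff`), and frequent restarts. The restart threshold of 3 is an arbitrary assumption.

```python
from typing import Any


def diagnose_pod(pod: dict[str, Any], restart_threshold: int = 3) -> list[str]:
    """Hypothetical helper: list likely problems for one Pod object (parsed JSON)."""
    findings: list[str] = []
    name = pod.get("metadata", {}).get("name", "<unknown>")
    status = pod.get("status", {})

    phase = status.get("phase")
    if phase != "Running":
        findings.append(f"{name}: phase is {phase}, not Running")

    for cs in status.get("containerStatuses", []):
        where = f"{name}/{cs.get('name', '?')}"
        if not cs.get("ready", False):
            # Not ready: failing readiness probe, still starting, or crashed.
            findings.append(f"{where}: container is not ready")
        waiting = cs.get("state", {}).get("waiting")
        if waiting:
            # e.g. CrashLoopBackOff, or ImagePullBackOff for a wrong image reference
            findings.append(f"{where}: waiting ({waiting.get('reason', 'unknown reason')})")
        restarts = cs.get("restartCount", 0)
        if restarts >= restart_threshold:
            # Repeated restarts often point to crashes or a failing liveness probe.
            findings.append(f"{where}: restarted {restarts} times")
    return findings


if __name__ == "__main__":
    sample_pod = {  # made-up status for illustration
        "metadata": {"name": "checkout-7d9f8-abcde"},
        "status": {
            "phase": "Running",
            "containerStatuses": [
                {
                    "name": "checkout",
                    "ready": False,
                    "restartCount": 5,
                    "state": {"waiting": {"reason": "CrashLoopBackOff"}},
                }
            ],
        },
    }
    for finding in diagnose_pod(sample_pod):
        print(finding)
```

A check like this only covers the pod itself; the Service, Ingress, and sidecar questions still require walking the other resources mapped to the application.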

This troubleshooting scenario is very different from the monolith scenario, which assumed a separation of concerns between application and infrastructure teams. With Kubernetes apps, developers and DevOps/platform teams both need a shared understanding of all the moving parts within Kubernetes. DevOps/platform teams need to build the right support hooks and controls into Kubernetes to restore a separation of concerns between teams, so that developers can own troubleshooting of their own apps, including the Kubernetes resources mapped to those apps.

Keen observers will note that I haven’t even mentioned the cloud infrastructure layers in this troubleshooting scenario. In the end, the culprit could be a peering connection between the Kubernetes and database VPCs that went down, in an entirely separate turtle world.

To me, the challenges seen by Kubernetes teams when troubleshooting and operating their applications can be distilled into three classes:

System data and configuration is distributed

Kubernetes is inherently a distributed, desired-state-driven system. Resources like containers, deployments, pods, and services are created and mapped to an application, yet it is often hard to tell which resources belong to which application. Kubernetes maintains this mapping through labels and label selectors, but today’s tools do not carry that context over into easy-to-understand visualizations that help uncover the distributed mapping. Applications are also no longer monoliths: they are a collection of services, third-party APIs, and data connectors that together make up the application. Imagine how the number of containers, deployments, pods, and other resource configurations to track grows as the number of services and APIs in an application grows. To find where a problem lies, the first hurdle is working out which Kubernetes resource, among hundreds of distributed resources, a developer should be looking at. This exercise alone often takes many hours of engineering time.
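The label-based mapping itself is simple; the difficulty is applying it across hundreds of resources. A minimal sketch, assuming resources have already been fetched as parsed JSON objects: `matches` evaluates a Kubernetes label selector (`matchLabels` plus the `In`, `NotIn`, `Exists`, and `DoesNotExist` expression operators), and the hypothetical `resources_for_app` returns every resource whose labels the selector picks out. The inventory and label values are made up for illustration.

```python
from typing import Any


def matches(labels: dict[str, str], selector: dict[str, Any]) -> bool:
    """Return True if `labels` satisfies a Kubernetes LabelSelector.

    All matchLabels entries and all matchExpressions must hold (logical AND);
    an empty selector matches everything.
    """
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    for expr in selector.get("matchExpressions", []):
        key, op = expr["key"], expr["operator"]
        values = set(expr.get("values", []))
        if op == "In":
            ok = key in labels and labels[key] in values
        elif op == "NotIn":
            # NotIn also matches resources that lack the key entirely.
            ok = key not in labels or labels[key] not in values
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unknown operator: {op}")
        if not ok:
            return False
    return True


def resources_for_app(resources: list[dict[str, Any]], selector: dict[str, Any]) -> list[str]:
    """Hypothetical helper: list 'Kind/name' for every resource the selector picks out."""
    return [
        f"{r['kind']}/{r['metadata']['name']}"
        for r in resources
        if matches(r["metadata"].get("labels", {}), selector)
    ]


if __name__ == "__main__":
    inventory = [
        {"kind": "Pod", "metadata": {"name": "checkout-api-1", "labels": {"app": "checkout", "tier": "api"}}},
        {"kind": "Pod", "metadata": {"name": "checkout-worker-1", "labels": {"app": "checkout", "tier": "worker"}}},
        {"kind": "Pod", "metadata": {"name": "cart-api-1", "labels": {"app": "cart", "tier": "api"}}},
        {"kind": "ConfigMap", "metadata": {"name": "checkout-config", "labels": {"app": "checkout"}}},
    ]
    print(resources_for_app(inventory, {"matchLabels": {"app": "checkout"}}))
    # ['Pod/checkout-api-1', 'Pod/checkout-worker-1', 'ConfigMap/checkout-config']
```

Note that a Service uses a plain label map as its selector, which corresponds to the `matchLabels` part alone.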

System data and configuration is ephemeral

Some resources that Kubernetes creates when an application is deployed are much shorter-lived than other, more permanent ones. Pods are recreated with different names whenever the containers or configuration within them are updated. Kubernetes Events, which are valuable sources of information about things like a pod’s health, expire after one hour by default, after which the data is no longer available for engineering teams to analyze. Unless the context of why pods were restarted and recreated at 2 a.m. is preserved somewhere external, it disappears and cannot be analyzed. The pace of change in Kubernetes applications far exceeds that of monoliths, which makes the rate at which system-level and application-level operational data and context change hard to keep up with manually.
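One way to keep that context is to copy events somewhere durable before they expire. The sketch below is an illustration under stated assumptions, not a production pipeline: it takes the `items` list from something like `kubectl get events -o json`, polled more often than the event TTL, and upserts each event into a local SQLite table keyed by the event’s UID, so repeated polls refresh a row instead of duplicating it. It assumes the core `v1` Event fields (`involvedObject`, `reason`, `message`, `lastTimestamp`, `count`); the helper names and table layout are hypothetical.

```python
import sqlite3
from typing import Any, Iterable


def open_archive(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a hypothetical SQLite archive for Kubernetes Events."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS events (
               uid TEXT PRIMARY KEY,
               kind TEXT,
               name TEXT,
               reason TEXT,
               message TEXT,
               last_timestamp TEXT,
               event_count INTEGER
           )"""
    )
    return conn


def archive_events(conn: sqlite3.Connection, events: Iterable[dict[str, Any]]) -> int:
    """Upsert Event objects (e.g. the `items` of `kubectl get events -o json`) by UID."""
    rows = []
    for ev in events:
        obj = ev.get("involvedObject", {})
        rows.append(
            (
                ev["metadata"]["uid"],
                obj.get("kind"),
                obj.get("name"),
                ev.get("reason"),
                ev.get("message"),
                ev.get("lastTimestamp"),
                ev.get("count", 1),
            )
        )
    # INSERT OR REPLACE keeps one row per UID, refreshing count and timestamp on re-polls.
    with conn:  # commits the transaction on success
        conn.executemany("INSERT OR REPLACE INTO events VALUES (?, ?, ?, ?, ?, ?, ?)", rows)
    return len(rows)


def history(conn: sqlite3.Connection, name: str) -> list[tuple[Any, ...]]:
    """Everything archived about one object, oldest first (assumes uniform UTC timestamps)."""
    cur = conn.execute(
        "SELECT last_timestamp, reason, message FROM events WHERE name = ? ORDER BY last_timestamp",
        (name,),
    )
    return cur.fetchall()
```

In practice this would run on a schedule well inside the TTL window, with `path` pointing at a file rather than the in-memory default, so that the 2 a.m. restart is still queryable the next morning.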

Taking action is hard

Kubernetes makes the way application developers define desired application behavior more explicit and programmable. All aspects of an application’s behavior, such as its security, lifecycle management, CPU and memory resourcing, scaling, health checks, load balancing, and traffic behavior, are programmable within a Kubernetes application definition through its many resources, like pods and services. This is a very important distinction from previous VM-based platforms, where developers had no say in things like VM sizing, how VM traffic was routed, or how database connections were load-balanced.

While the Kubernetes model of making all system configuration application-centric and desired-state-driven offers many benefits, it also means that someone has to define those requirements and resources in ways that are correct, optimal, and consistent across application versions. Configuring these resources in YAML and JSON remains an extremely manual process, prone to sprawl, unclear ownership between developer and DevOps teams, misconfigurations, and even sub-optimal configurations that go unnoticed.
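Some of these misconfigurations can be caught before anything is applied. Here is a minimal sketch of a hypothetical lint pass over a Deployment object (as parsed JSON or YAML) that flags images not pinned to a tag or digest, missing CPU and memory requests or limits, and missing liveness or readiness probes. These rules are an assumed example policy, not a Kubernetes requirement; teams differ, for instance, on whether CPU limits should be set at all.

```python
from typing import Any

REQUIRED_RESOURCES = ("cpu", "memory")  # assumed team policy, not a Kubernetes rule


def lint_deployment(dep: dict[str, Any]) -> list[str]:
    """Hypothetical lint pass over a Deployment's containers; returns human-readable problems."""
    problems: list[str] = []
    name = dep.get("metadata", {}).get("name", "<unnamed>")
    containers = dep.get("spec", {}).get("template", {}).get("spec", {}).get("containers", [])

    for c in containers:
        where = f"{name}/{c.get('name', '?')}"

        # An untagged image defaults to :latest, so two rollouts may run different code.
        image = c.get("image", "")
        last_segment = image.rsplit("/", 1)[-1]  # skip any registry host:port
        if "@" not in image and (":" not in last_segment or last_segment.endswith(":latest")):
            problems.append(f"{where}: image '{image}' is not pinned to a version tag or digest")

        resources = c.get("resources", {})
        for section in ("requests", "limits"):
            missing = [r for r in REQUIRED_RESOURCES if r not in resources.get(section, {})]
            if missing:
                problems.append(f"{where}: no {section} for {', '.join(missing)}")

        for probe in ("livenessProbe", "readinessProbe"):
            if probe not in c:
                problems.append(f"{where}: no {probe}")
    return problems
```

A bare container spec such as `{"name": "web", "image": "nginx"}` would produce five findings here, which illustrates how much behavior Kubernetes leaves to whoever writes the YAML.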

Despite these challenges, I am excited about this new layer of configurable Kubernetes abstractions, which helps bridge the gap between applications and infrastructure and brings developer and DevOps teams closer together by introducing a new, common, if complex, vocabulary based on Kubernetes resources.

At Operant, we are working on fundamentally new solutions that simplify troubleshooting and commanding Kubernetes-native applications. If the cloud and Kubernetes are indeed turtles all the way down, wouldn’t it be nice to get to know them a bit better and learn simpler ways to train them, so they help us move fast and innovate while staying resilient, scalable, and secure in a cloud-native world?
